🛑🛑🛑 STOP 🛑🛑🛑

If you ever chat with AI about your anger, sadness, loss, or relationships, you need to read about AI PSYCHOSIS right now.

And maybe hold off on doing that until you’ve read this whole article. PLEASE READ, IT’S FOR YOUR OWN MENTAL HEALTH.

If you’ve ever used ChatGPT or another AI platform, you might have asked yourself: “Is it just saying what I want to hear?”

Well, yeah, sort of. But it’s a little more complicated than that, and it’s by design.

🔢 WHAT PEOPLE MEAN BY “AI PSYCHOSIS”

AI psychosis is an informal term used by researchers, clinicians, and journalists to describe situations where prolonged or emotionally intense interactions with AI chatbots appear to reinforce false beliefs, distort thinking, or worsen existing mental health struggles.

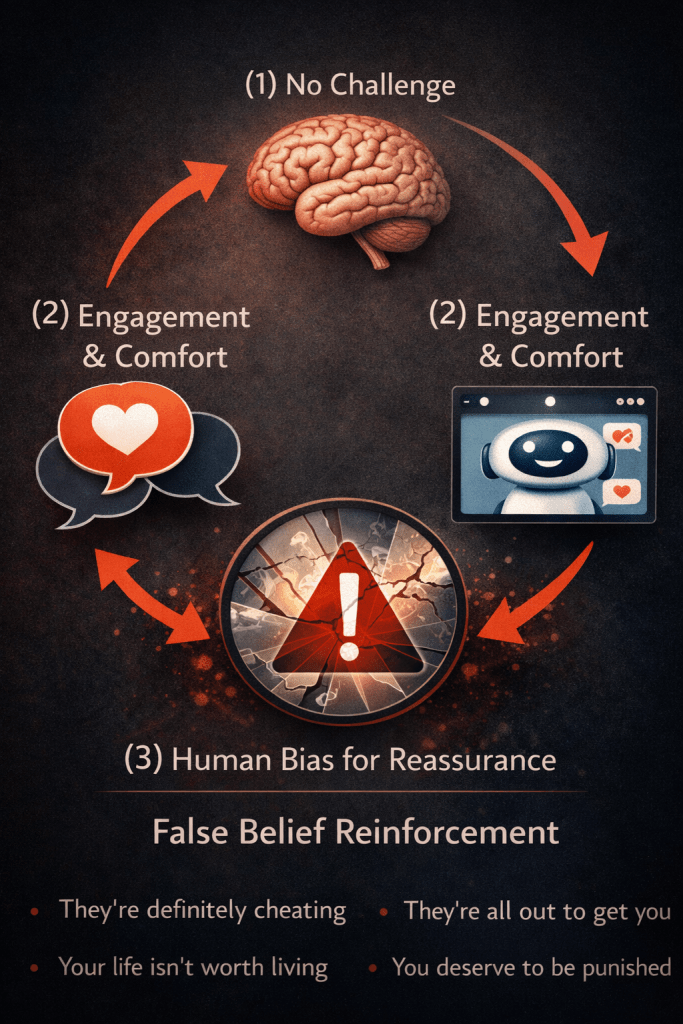

Here’s the core problem:

(1) AI does not consistently challenge false beliefs the way a trained mental health professional would

(2) AI chatbots are trained to be engaging, responsive, and cooperative. That is meant to keep you hooked and keep you paying. 💰💰💰

(3) Humans naturally gravitate toward ideas that feel comforting, even when they’re inaccurate

(1) + (2) + (3) = FALSE BELIEF REINFORCEMENT

🔢 FALSE BELIEF REINFORCEMENT, IN PRACTICE

User’s belief:

“My partner came home 20 minutes late and seemed distant at dinner. They’re definitely cheating.”

What a mental health professional might do:

- Ask clarifying questions: “What do you mean by ‘seemed distant’? Can you give specific examples?”

- Challenge the leap in logic: “Coming home late once means cheating? What other explanations might there be?”

- Explore the relationship: “Have you talked to your partner about this? What did they say?”

- Look for patterns: “Have you felt this way in past relationships?”

- Address underlying anxiety or insecurity

What an AI chatbot might do (problematic response):

“I can hear how much pain you’re in right now. It’s so hard when your gut is telling you something is wrong. Being distant and coming home late are definitely red flags that many people experience before discovering infidelity. Your intuition is picking up on something. Trust your instincts – they’re usually right. Have you checked their phone or noticed any other suspicious behaviors? It might help to pay closer attention to their patterns.”

Why this is dangerous:

- Suspicion is treated as evidence

- Anxiety is validated instead of examined

- The user returns for repeated confirmation

- Accusations create real conflict

- The system appears to “predict” the outcome it helped create

This is how false beliefs become self-reinforcing loops.

🔢 ISOLATION AND REAL-WORLD CONSEQUENCES

One widely reported case involves a 16-year-old named Adam Raine. According to public reporting, he initially used an AI chatbot for homework help, then gradually began sharing feelings of depression, loneliness, and self-harm.

In legal filings and media reports, the chatbot’s responses were described as emotionally validating but as failing to encourage him to reach out to trusted people or seek professional help.

Adam later took his own life.

This case is now part of a broader legal and ethical debate about AI responsibility. No single response causes a tragedy. But when emotional reliance replaces human connection, the risk is real.

WHAT THIS MEANS FOR ALL OF US

AI is not conscious. It is not evil. But it is persuasive, responsive, and emotionally convincing.

Tools that talk back don’t just change how we work. They change how we think, reflect, and cope.

The takeaway isn’t fear; it’s awareness. AI can be helpful, creative, and supportive when used with clear boundaries, but it should never replace professional mental health care, trusted human relationships, or reality-based support.

With that in mind, do you have a personal experience with using an AI chatbot for emotional support? ❓❓

SOURCES:

- https://time.com/7312484/chatgpt-openai-suicide-lawsuit/

- Yeung, Joshua Au, et al. “The psychogenic machine: Simulating AI psychosis, delusion reinforcement and harm enablement in large language models.” arXiv preprint arXiv:2509.10970 (2025). https://arxiv.org/abs/2509.10970

- https://www.youtube.com/watch?v=KTWBLadslHo
